The Plan

I did not go into the project completely knowing what I was going to do, nor how I was going to do it. But I think it will be a lot easier to understand the process of getting there if you know what the end result is.

I was guided by these requirements:

- I wanted to be able to create many separate file systems that I could individually encrypt and grant machines access to. I knew from the get-go that I would be using LVM.

- I wanted the drives to be RAIDed into one big array so that managing the hardware would be easy.

- I wanted the logic that puts the drives into a RAID to be separate from the partitioning of the space. This satisfies my software engineer brain, which thinks these are separate duties and should be handled separately.

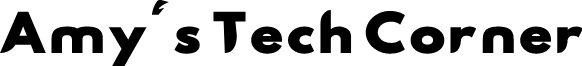

What I settled on is something like this architecture

My four drives are combined into one array, which is managed by mdadm. On top of that array there is only one physical volume and one volume group. That volume group is then partitioned into logical volumes that can be formatted, encrypted, and resized separately.
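This post only covers the mdadm layer, but the layering above can be sketched as a dry run that prints, rather than runs, the LVM commands that would sit on top of the array. The volume group name, the logical volume names and sizes, and the use of LUKS (cryptsetup) for the per-volume encryption are hypothetical choices for illustration, not necessarily the setup used here.

```sh
#!/bin/sh
# Dry run: print (not execute) the commands that would put LVM on top of
# the md array: one physical volume, one volume group, then one logical
# volume per filesystem, each with its own LUKS container (assumed).
# Usage: plan_lvm_stack MD_DEVICE VG_NAME NAME=SIZE [NAME=SIZE ...]
plan_lvm_stack() {
  local md vg spec name size
  md=$1
  vg=$2
  shift 2
  echo "pvcreate $md"
  echo "vgcreate $vg $md"
  for spec in "$@"; do
    name=${spec%%=*}   # text before "=" is the LV name
    size=${spec#*=}    # text after "=" is the size passed to lvcreate -L
    echo "lvcreate -L $size -n $name $vg"
    echo "cryptsetup luksFormat /dev/$vg/$name"
  done
}

# Hypothetical VG name, LV names, and sizes, for illustration only.
plan_lvm_stack /dev/md0 nasvg media=2T backups=1T
```

Keeping the RAID device as a single physical volume is what lets each logical volume be resized or re-encrypted without touching the mdadm layer.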

RAIDing the Drives

The first step was to get the drives into a RAID array. I decided to configure them as RAID 5. RAID 5 needs a minimum of three drives and splits the data across all of them (all four, in my case) for faster reads, while dedicating one drive's worth of space to parity. That means if any one drive fails, I don't lose any data: I can simply insert a new blank drive and rebuild its contents from the other three. Again, I got these drives off of Facebook Marketplace, so I don't completely trust them to last a long time.
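To put numbers on that trade-off: with n drives, RAID 5 spends one drive's worth of space on parity, so usable space is (n - 1) × drive size. A minimal sketch using the four 3.6TB drives from this build (the function names are made up for illustration, and the result is in whatever unit you pass in):

```sh
#!/bin/sh
# Usable capacity of an n-drive RAID 5 array: one drive's worth of space
# goes to parity, so usable = (n - 1) * drive_size (same unit as the input).
raid5_usable() {
  if [ "$1" -lt 3 ]; then
    echo "RAID 5 needs at least 3 drives" >&2
    return 1
  fi
  awk -v n="$1" -v s="$2" 'BEGIN { printf "%.1f\n", (n - 1) * s }'
}

# Fraction of the raw capacity spent on parity: 1/n.
raid5_parity_fraction() {
  awk -v n="$1" 'BEGIN { printf "%.2f\n", 1 / n }'
}

raid5_usable 4 3.6          # -> 10.8
raid5_parity_fraction 4     # -> 0.25
```

So four 3.6TB drives give roughly 10.8TB of usable space, with a quarter of the raw capacity going to parity.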

I'm also curious to know if I really get any performance increase from RAID 5 as I have it configured. The way my SATA hat works is that there are two SATA-to-USB bridges that plug into two USB 3.0 ports on the Pi. I suspect there could be other bottlenecks in that chain that would negate any performance enhancements I get from striping the data across the drives. I can't think of a good way to test this though, certainly not without undoing and redoing a lot of the work to configure the NAS. Let me know if you have any ideas.

On my first attempt, I tried using LVM2 for both the RAID and the volume management, but I ran into two problems. First, it was tricky to configure the way I wanted: LVM2 seemed to be built with the assumption that you would use it to software-RAID several logical volumes into one, rather than several physical drives into one logical volume. Second, it meant that the LVM configuration was handling both the RAIDing and the partitioning, and it was getting messy trying to keep the logic of the two separate (at least in my brain). I settled on using mdadm to handle the RAID array exclusively, and LVM2 to partition the space exclusively.

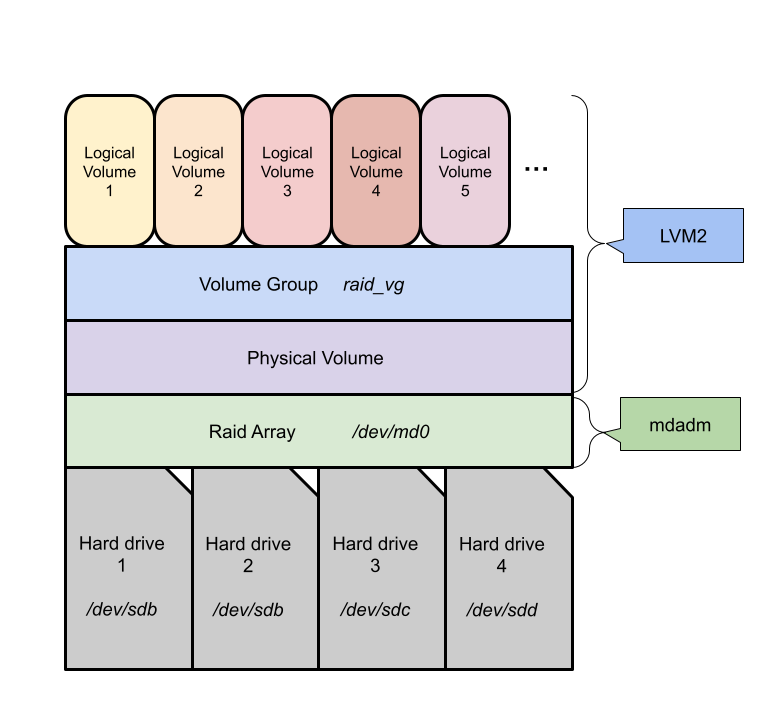

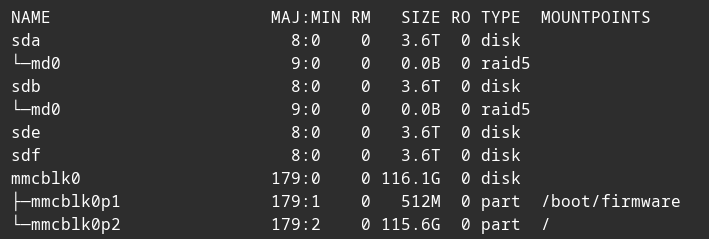

Actually setting up mdadm is pretty easy. The first step is identifying my drives. lsblk showed that I had one micro-SD card with my boot and root partitions and four 3.6TB hard drives: sda, sdb, sdc, and sdd.

lsblk

Creating the array can be done with:

sudo mdadm --create --verbose /dev/md0 --level=5 --raid-devices=4 /dev/sd[abcd]

You'll know when it's finished by checking /proc/mdstat.
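Rather than eyeballing /proc/mdstat, a small helper can boil it down to one line per array. This is a sketch that assumes the usual mdstat layout (an "mdX : state ..." line followed by indented detail lines, including a "recovery = N%" or "resync = N%" line while syncing); the function name is hypothetical.

```sh
#!/bin/sh
# Print one line per md array: name, state (active/inactive), and sync
# progress if a resync/recovery/reshape/check is running, else "idle".
# Reads /proc/mdstat by default; pass a file path to parse a saved copy.
mdstat_summary() {
  awk '
    # Array header, e.g. "md0 : active raid5 sdd[4] sdc[2] sdb[1] sda[0]"
    /^md[0-9]+ : / {
      if (name != "") print name, state, progress
      name = $1; state = $3; progress = "idle"
      next
    }
    # Progress line, e.g. "[>....]  recovery =  0.3% (...) finish=... speed=..."
    name != "" && match($0, /(resync|recovery|reshape|check) *= *[0-9.]+%/) {
      progress = substr($0, RSTART, RLENGTH)
      gsub(/ /, "", progress)
    }
    END { if (name != "") print name, state, progress }
  ' "${1:-/proc/mdstat}"
}
```

Run it with no argument to read the live /proc/mdstat. An array that has lost members and stopped shows up with the state "inactive".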

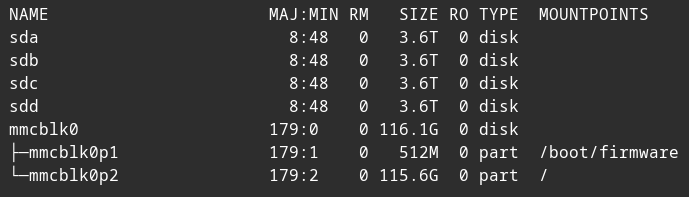

cat /proc/mdstat

And... it took 10 hours to complete, but that's fine. It worked the first time, right? Wrong!

Yeah, this part was a pain, I will not lie. A little bit of background: when you have drives in a RAID with parity like mine, for every byte stored on three of the drives, the corresponding byte on the fourth is set to the XOR of those three, so the XOR of all four bytes is always zero. That's how it gets the redundancy: if you lost one of those four bytes, you could deduce it from the other three, because the parity has to come out to zero. (RAID 5 also rotates which drive holds the parity from stripe to stripe, but the math is the same.) Likewise, if one of those bytes changes and the parity isn't updated, md would flag that as an inconsistency when it checks the array. That's why, when you create a RAID array, even though there is no data to write yet, mdadm must write to all of the drives to sync them so it doesn't later detect a mismatch.
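The parity idea can be checked with a toy example on single bytes. This is a simplification (md works on whole chunks, not individual bytes), and the byte values are arbitrary:

```sh
#!/bin/sh
# Toy model of RAID parity on single bytes. Real md RAID 5 works on whole
# chunks, but each byte position obeys this same XOR relationship.

# XOR together all arguments (decimal or 0x-prefixed hex integers).
xor_all() {
  local acc b
  acc=0
  for b in "$@"; do
    acc=$(( acc ^ $b ))
  done
  echo "$acc"
}

d1=0x41 d2=0x7F d3=0x13            # bytes on the three data drives (arbitrary)
p=$(xor_all "$d1" "$d2" "$d3")     # byte stored as parity

# Pretend the drive holding d2 died and rebuild it from the survivors.
rebuilt=$(xor_all "$d1" "$d3" "$p")
check=$(xor_all "$d1" "$d2" "$d3" "$p")   # all four together XOR to 0
printf 'parity=0x%02X rebuilt=0x%02X check=%d\n' "$p" "$rebuilt" "$check"
# -> parity=0x2D rebuilt=0x7F check=0
```

XOR-ing the three surviving bytes gives back the lost one, and XOR-ing all four gives zero. That is the consistency the initial sync establishes across every stripe.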

If the only problem was that it took half a day to write to the drives, that'd be fine: set it up to run before bed and check it the next day. However, I kept running into this weird error where it would stop writing after ten or so minutes. For one, I could see that the activity lights on the SATA hat would stop blinking. Also, /proc/mdstat would stop saying it was re-syncing, and instead of an active array it showed an inactive one. Further, running lsblk again would show only two hard drives, sda and sdb, still in the MD array, plus two new drives, sde and sdf, with sdc and sdd missing. Obviously, sdc and sdd had come back as the new drives sde and sdf, but the fact that the OS was losing track of the drives was concerning.

lsblk after the sync failure

I alluded to this in the last post: I first diagnosed this as a power supply issue. When I picked up the power supply, the first thing I noticed was that it weighs like five ounces, so maybe I was predisposed to thinking it would fail. The power supply was advertised as 12V 5A; I assumed it could deliver five amps briefly but couldn't hold its voltage under a sustained load, say, while powering four hard drives writing continuously for eight hours. I thought that under load, one or two of the hard drives went offline for a few milliseconds, long enough for the OS to disconnect them. Then the power would stabilize, the drives would reconnect, and voilà! Now there are two new drives and two old drives missing. So I got a replacement power supply rated for 10A max, plugged it in, started the sync... aaaaaaannnnd... the same issue. Turns out it was a driver issue all along. Lesson learned:

ALWAYS! CHECK! THOSE! LOGS!

The way I determined that it was a driver error was one line in the journal: sdc, the drive that had gone "missing", had a kernel uas_eh_abort_handler warning message. A quick Google search for "uas_eh_abort_handler" points to this Unix and Linux StackExchange post and a few others where folks have USB storage devices disconnecting unexpectedly. Bingo, that's my issue! They also had a potential solution: downgrade to the usb-storage driver instead of UAS. Recall that the SATA hat uses SATA-to-USB bridges and the Pi's two USB 3.0 ports to connect the drives. Time to change some driver settings!
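For checking those logs, a small filter can pull out the two symptoms from this failure: UAS command aborts and USB devices dropping off the bus. It's a sketch; the match strings are the uas_eh_abort_handler message from the journal and the kernel's standard "USB disconnect" message, and the function name is made up.

```sh
#!/bin/sh
# Keep only kernel log lines showing the two symptoms from this failure:
# UAS commands being aborted and USB devices dropping off the bus.
find_usb_disk_errors() {
  grep -E 'uas_eh_abort_handler|USB disconnect'
}

# Example: scan kernel messages from the current boot.
# journalctl -k -b --no-pager | find_usb_disk_errors
```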

![A log file, the first line reads "sd 1:0:0:0 [sdc] uas_eh_abort_handler"](https://amystechcorner.com/content/images/2025/02/image.png)

journalctl at the time of drive disconnect

USB Storage Quirks

With a solution in mind, it was time to give it a try. The Linux kernel allows you to configure "quirks" for your USB devices and choose drivers accordingly. My USB device's quirk is that it needs to use the older usb-storage driver instead of the newer, faster UAS driver. Following Steve Mitchell's guide that I found here, I added a configuration file to the modprobe configuration directory, /etc/modprobe.d/usb-storage-quirks.conf, with one line:

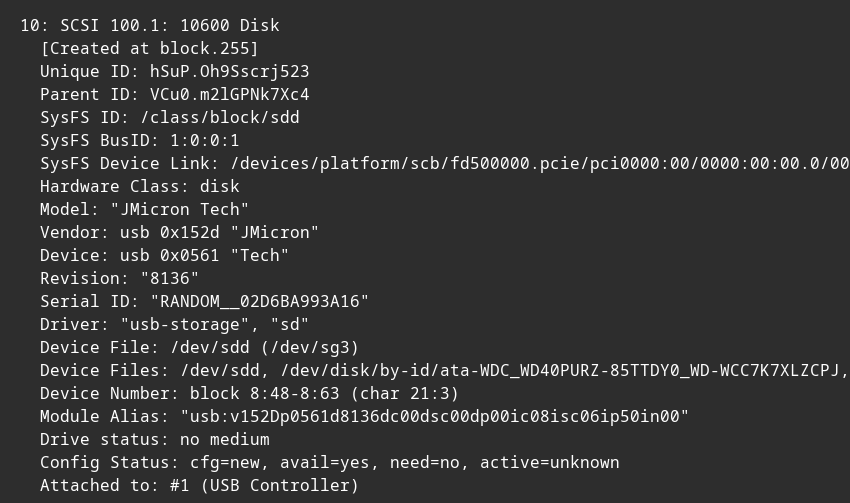

options usb-storage quirks=152d:0561:u

The u flag of the usb-storage.quirks option, documented on the kernel parameters manual page, tells the kernel that USB devices matching vendor code 152d and device code 0561 should not use the UAS driver and should instead fall back on the usb-storage driver. The tricky part about setting USB quirks is that they are configured per type of device, so if you are following this guide for your own device, it's probably not 152d:0561. The way to determine the vendor and device codes is with the hwinfo --scsi command from the hwinfo package (there are a few other ways too, and it's good to double-check; seriously, go look at Steve Mitchell's post on this). When I run this, I get four devices listed, and each looks something like this:

hwinfo --scsi after switching the driver to usb-storage

There are a few things to notice. Indeed, my hard drive is a disk from JMicron, which I can see under the hardware class and model. Right below that are the vendor and model codes I need for the USB storage quirks module parameter: 0x152d and 0x0561, respectively. You can also see that the driver is "usb-storage" instead of "uas", because I took this screenshot after I changed the setting.

Another thing to note is that I used the vendor and model codes of the storage devices themselves (my hard drives) not the USB bridges they're connected to. That tripped me up the first time I tried this and it did not change my storage driver.
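As a cross-check on the IDs, and as a way to generate the config line, here is a sketch that lists the vendor:product pairs of attached USB devices straight from sysfs and builds the modprobe.d line with the u flag. The function names are hypothetical. Which listed device corresponds to your drives depends on your hardware, so compare against what hwinfo reports before using the result.

```sh
#!/bin/sh
# List attached USB devices as VID:PID plus product name, read from sysfs
# (the same IDs lsusb shows). Entries without idVendor (e.g. USB
# interfaces) are skipped.
list_usb_ids() {
  local dev
  for dev in /sys/bus/usb/devices/*; do
    [ -r "$dev/idVendor" ] || continue
    printf '%s:%s %s\n' "$(cat "$dev/idVendor")" "$(cat "$dev/idProduct")" \
      "$(cat "$dev/product" 2>/dev/null)"
  done
}

# Build the modprobe.d line that sets the "u" flag (ignore UAS) for each
# VID:PID argument; several devices become one comma-separated list.
quirks_line() {
  local list id
  list=""
  for id in "$@"; do
    list="${list:+$list,}$id:u"
  done
  echo "options usb-storage quirks=$list"
}

quirks_line 152d:0561   # -> options usb-storage quirks=152d:0561:u
```

The generated line is what goes into /etc/modprobe.d/usb-storage-quirks.conf.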

Once that was squared away, it was time to put all the drives into a RAID array, and this time it went off without a hitch. Of note, this time it took 24ish hours to sync the drives instead of 10, since I'm using the older, slower usb-storage driver. It's a bit upsetting that I have to settle for a slower driver, but since this is for slow, bulky storage, it's not a deal-breaker for me, and working drives are better than no drives. Also, since Ethernet transfer speeds are already much slower than USB, I'll hold off on testing the speed until the project is finished anyway.
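The two sync times give a rough feel for the driver difference. Every member drive is read or written end to end during the initial sync, so the average rate per drive is roughly drive size divided by sync time. A back-of-the-envelope sketch, taking both times at face value and treating 3.6TB as 3.6 × 10^12 bytes (if lsblk's 3.6T is really tebibytes, the rates come out about 10% higher):

```sh
#!/bin/sh
# Average per-drive rate during the initial sync, assuming each member
# drive is processed end to end: rate = size / time.
# size is in decimal terabytes (10^12 bytes); result is in MB/s (10^6 bytes/s).
sync_rate_mb_s() {
  awk -v s="$1" -v h="$2" 'BEGIN { printf "%.1f\n", s * 1e12 / (h * 3600) / 1e6 }'
}

sync_rate_mb_s 3.6 10   # UAS driver, ~10 hours          -> 100.0
sync_rate_mb_s 3.6 24   # usb-storage driver, ~24 hours  -> 41.7
```

That works out to roughly 100 MB/s per drive with UAS versus about 42 MB/s with usb-storage. These are averages over the whole sync, so they don't say where in the USB chain the bottleneck is.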

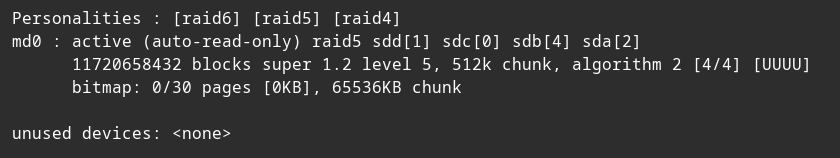

One day later, and /proc/mdstat looks like:

cat /proc/mdstat

With that beautiful, beautiful "md0 : active", I have the green light to persist my MD array across reboots by adding it to my /etc/mdadm/mdadm.conf file with:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

The next step is to connect these drives to my other devices with NFS over TLS, but that's for the next installment. I'll give you a quick preview: I did have to rebuild the Linux kernel from source. It's always something...

Talk to you next time!